Program

The Interference program is designed to be printed from the browser (Firefox or Iceweasel for the intended outcome, any other browser for unexpected results...).

It consists of three parts: a timetable for the event, a collection of the abstracts of all the sessions, and a reader made up of texts submitted by the participants.

To print, just press CTRL + P.

Remove all default headers and footers in the print dialog, and please be patient, as rendering all the pages of the reader together can take more than a minute.

15.08 11:00 Brunch –

15.08 12:00 Hello –

Interference

Interference, n: Interference is not a hacker conference. From a threat to so-called national security, hacking has become an instrument for reinforcing the status quo. Fed up with yet another recuperation, the aim is to re/contextualize hacking as a conflictual praxis and release it from its technofetishist boundaries. Bypassing the cultural filters, Interference wants to take the technical expertise of the hacking scene out of its isolation and place it within the broader perspective of the societal structures it shapes and is part of. Interference tries not to define itself. Interference challenges hackers’ identity, the internal dynamics of hacker culture and its ethical values. It undermines given identities and rejects given definitions. Interference is a hacking event from an anarchist perspective: it doesn't seek uniformity on the level of skills or interests, but rather focuses on a shared basis of intuitive resistance and a critical attitude towards the techno-social apparatus. Interference is three days of exploring modes of combining theory and practice, breaking and (re)inventing systems and networks, and playing with the art and politics of everyday life. Topics may or may not include philosophy of technology, spectacle, communication guerrilla, temporary autonomous zones, cybernetics, bureaucratic exploits, the illusions of liberating technologies, speculative software, the creative capitalism joke, the maker society and its enemies, hidden and self-censorship, and the refusal of the binarity of gender, life, and logic. Interference welcomes discordians, intervention artists, artificial lifeforms, digital alchemists, oppressed droids, luddite hackers and critical engineers to diverge from the existent, dance with fire-spitting robots, hack the urban environment, break locks, perform ternary voodoo, decentralise and disconnect networks, explore the potential of noise, build botnets, and party all night.
The event is intended to be as self-organised as possible, which means you are invited to contribute on your own initiative with your skills and interests. Bring your talk, workshop, debate, performance, opinion, installation, project, critique, the things you're interested in, the things you want to discuss. Especially those not listed above. By the way, the text is full of academic/NGO talk; you must concentrate to follow it. Some people have no contact with less-educated people, and they are excluded from society. NGO people work in the NGO sector (with high salaries) and are separated from society. They will never make a revolution; they enjoy capitalism.

15.08 13:00

15.08 14:00 Transparency critiques – Lonneke van der Velden

15.08 15:00 The DCP Bay – taziden

|

|---|

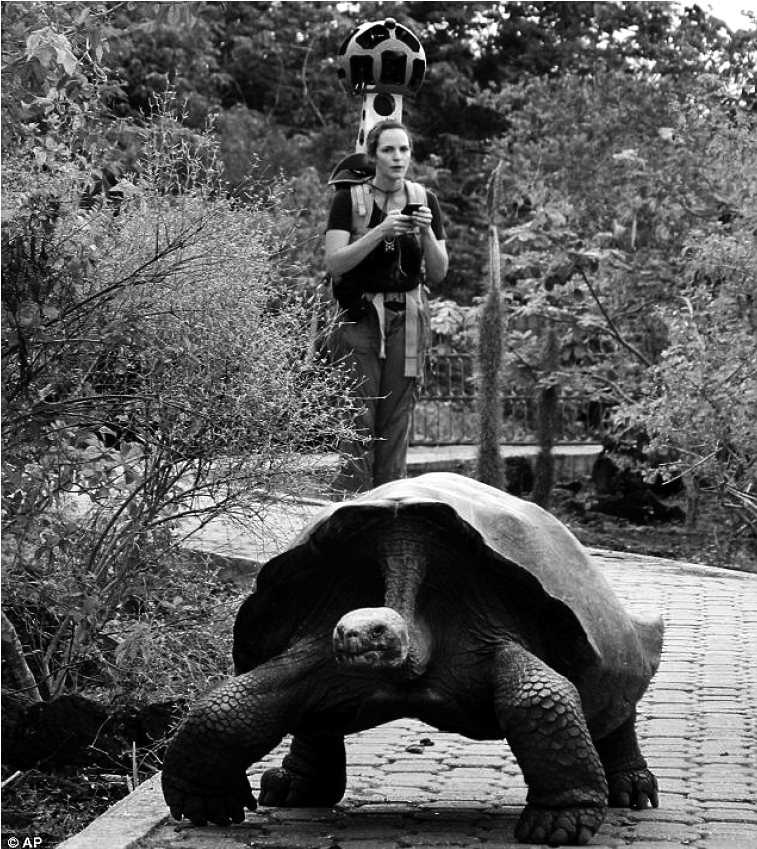

15.08 16:00 The Map is not the Territory: future interactions in hyperspace – A, N and D collective

A, N and D collective

The Map is not the Territory: future interactions in hyperspace

15.08 16:00 Scaling the revolution – Lunar

15.08 17:00 The Map is not the Territory: future interactions in hyperspace – A, N and D collective

15.08 17:00 Novelty against sovereignty – Johan Söderberg

Johan Söderberg

Legal Highs and Piracy

Labouring in the legal grey zone

Introduction

The economy of drug trafficking holds up a mirror to the official economy (Ruggiero & South, 1997). It can therefore help us to catch sight of phenomena which have grown too familiar or are clouded by euphemisms. For instance, the organisation of local drug trafficking in the Baltimore district in the US in the 1990s closely resembled an idealised notion of a “cottage industry” (Eck & Gersh, 2000). Likewise, the wheeling-and-dealing pusher can be said to personify the entrepreneurial subject avant la lettre (South, 2004). This suggests that one can learn much about how officially recognised, white markets operate by studying illegal drug markets. In a similar vein, I call upon legal highs to throw new light on a phenomenon that has variously been labelled “open innovation” (Chesbrough, 2003), “democratisation of innovation” (von Hippel, 2005), or “research in the wild” (Callon & Rabeharisoa, 2003). Although the scholars mentioned above belong to different intellectual traditions and their objectives diverge a great deal, they are all trying to encircle the same phenomenon. The object of study is an economy where the tools and know-how to innovate have been dispersed beyond the confines of firms and state institutions and, subsequently, beyond the confines of experts and professionals. All the aforementioned scholars describe a trend which they consider, on the whole, to be benevolent. The relation between firm and user is assumed to be consensual and cooperative. As a consequence, with the exception of licensing regimes and intellectual property rights, questions about regulation and law enforcement have rarely been raised in relation to ‘open innovation’ or lay expertise (cf. Söderberg, 2010).
If we move from mainstream academic writing to more activist thinking about the subject, such as the idea of a peer-to-peer society, it remains the case that the role of the state and the law in this transition has been given little reflection. This lacuna is consistent with the underlying hope that the state will fall apart when users withdraw from it into autonomous practices, such as darknets, crypto-currencies, etc. A new light falls on these assumptions when the users in question are tweaking molecular structures in order to circumvent legal definitions. The state and the law are not absent from, but constitutive of, these practices, even as the users seek to avoid their gravitational field. The purpose of this paper is to present an empirical case which compels us to adopt a more antagonistic perspective on the market economy, thus mandating a different theoretical apparatus from the ones now commonly drawn upon in studies of open innovation and/or user innovation. In order to foreground aspects of antagonism and contradiction in the regulation of (open) innovation processes, I turn to Carl Schmitt, the infamous legal theorist of Nazi Germany, and two of his contemporary critics, Franz Neumann and Otto Kirchheimer. The latter two, émigrés and associates of the Frankfurt School, anchored their reflections on law and legal order in transformations of the economy. Like theirs, my inquiry into the regulation of legal highs falls back on an analysis of the economy. By making a comparison with activists and entrepreneurs developing filesharing tools, typically with the intent of violating copyright law, I hope to demonstrate that legal highs are not a stand-alone case. Rather, they give an indication of contradictions at the heart of an economy centred on fostering innovation and ‘creative destruction’.

Brief overview of legal highs

The defining trait of ‘legal highs’ is that the substance has not yet been defined in law as a controlled substance.
Hence the production, possession and sale of the substance are not subject to law enforcement. Everything hinges on timing and novelty. When a substance has been prohibited, a small change to its molecular structure might suffice to circumvent the legal definition. What kind of changes are required depends on the legal procedures in the country in question. A recurrent finding in Innovation Studies is that lead users are often ahead of firms in discovering new products and emerging markets. Fittingly, for decades legal highs were a marginal phenomenon, chiefly engaged in by a subculture of “psychonauts”. Pioneers in underground chemistry like Nicholas Sand started synthesising DMT and LSD in the 1960s, and they have since been followed by generations of aspiring chemistry students. However, again confirming an insight from Innovation Studies, instances of one-off innovation by individuals for the sake of satisfying intellectual curiosity, personal consumption habits, or an urge to win recognition from one’s peers take on a different significance when the market grows bigger. At one point, some of the chemistry students decided to become full-time entrepreneurs, producing drugs not primarily for use but for sale. A major inflow came with the rave scene in the UK in the 1980s and early 1990s (McKay, 1994). The clampdown on ecstasy use triggered the quest for novel substances among a larger section of the population. Initially, information about how to synthesise or extract substances was disseminated in fanzines such as the Journal of Psychedelic Drugs, High Times and The Entheogen Review, to mention three of the most renowned, and reached an audience of a few thousand readers. With the spread of the Internet in the 1990s, information about fungi and herbs from all corners of the world could be broadcast to a global audience. Thanks to the legally uncertain status of legal highs, the products can be advertised and sold by webshops that ship internationally.
According to a recent survey, more than half of the shops were registered in the UK, and more than a third in the Netherlands (Hillebrand, Olszewski & Sedefov, 2010). In Ireland, drugs were sold in brick-and-mortar retail stores, so-called ‘head shops’, until a law was passed in 2010 banning this practice (Ryall & Butler, 2011). Globalisation has reshaped this market like any other. Most synthetic substances today are believed to be produced in China and, to a lesser extent, in India (Vardakou, Pistos & Spiliopoulou, 2011). Providing an exhaustive taxonomy of something as ephemeral as legal highs is self-defeating from the start. To give a rough overview of the phenomenon under discussion, however, some highlights are needed. A major group of legal highs is classified as synthetic cathinones. The source of inspiration is khat, a plant traditionally used in East African countries. One derivative of this substance that has made the headlines is mephedrone. The first known instance of its use was in 2007, but it became widespread in 2009, in response to new legislation in the UK that banned some other designer drugs. Subsequently, mephedrone was banned in the UK in 2010, as well as in the Netherlands and the Nordic countries (Winstock et al., 2010). Just a few months later, however, a new synthetic cathinone called naphyrone took its place (Vardakou et al., 2011). Another major class of drugs is the synthetic cannabinoids. On the street they go under the name “Spice” and are marketed as a legal alternative to marijuana. The synthetic compound is sprayed onto herbal leaves. It took a long time for drug prevention authorities to realise that the active substance did not stem from the plant mixture but from added chemicals. In fact, it appears that some of the chemicals have been added to the compound simply to lead researchers astray and avoid detection (Griffiths et al., 2010). Piperazines, finally, have effects that are said to mimic ecstasy.
One of these, 1-benzylpiperazine (BZP), became a cause célèbre after New Zealand recognised its legal status. From 2005 until 2008, BZP could legally be sold provided that certain restrictions on advertising and age limits were respected. The drug could be obtained from all kinds of outlets: corner shops, petrol stations and convenience stores (Sheridan & Butler, 2010).

Drugs and definitions

Definitions have always been key in discussions about drugs and addiction. The ambiguities start with the binary separation between legal drugs (tobacco, alcohol, pharmaceuticals) and illegal ones. It has often been remarked that the harm caused by a drug is only remotely related to the legal status of that substance. All the major drugs (opium, cocaine, cannabis and amphetamine) were initially considered to be therapeutic and still have some medical uses. Consequently, the intoxicating effects of a drug do not in themselves give an exhaustive answer to the question of why it has been banned. Conventions, public perceptions and entrenched interests carry heavy weight in defining what belongs on the right or the wrong side of the law. The centrality of definitions in this discussion is old hat among scholars studying misuse and addiction (Klaue, 1999; Derrida, 2003). However, in the case of legal highs, the inherent limitations of definitions and language take on heightened importance. For instance, to avoid health and safety regulations, the drugs are often labelled ‘research chemicals’ or ‘bath salts’, and the containers carry the warning “not for human consumption”. The drawback of this strategy is that no instructions can be given on the container about dosages or how to minimise risks when administering the drug. Legal highs thrive on phony definitions that reflect an ambiguity in the law as such. Ultimately, what legal highs point to are the limits and contradictions of modern sovereignty in one of its incarnations, the rule of law.
At the heart of the legal order lies a mismatch between, on the one hand, general and universal concepts of rights and, on the other, the singularity in which those rights must be defined and enforced. Examples abound of how this gap can be exploited to turn the law against itself. Tax evasion and offshore banking come to mind as examples from an altogether different field. This is to say that the case of legal highs is not exceptional. Nor is it novel. Perhaps the urge to play the letter of the law against its spirit and find loopholes is as old as the law’s origin in divine commandments. However, if the aim is to escape prosecution by the state, then the effectiveness of such practices presupposes a society bound by the rule of law. Rule-bending preys on the formalistic character of the law, which is specific to secular, democratic and liberal societies. Some core principles of the rule of law are as follows: a new law may only take effect after it has been passed; the law must be made known to the subjects who are ruled by it; and what counts as a violation of the law must be clearly defined, as must the degree of enforcement and the punitive measures merited by a violation. In addition to these principles for how laws must be formulated, considerable time-lags are imposed by the recognised, democratic process for passing laws. The original 1961 United Nations Single Convention on Narcotic Drugs laid down that unauthorised trade in a controlled substance should be made a criminal offence in signatory countries. It included a list of substances that were henceforth to be treated as illegal. Ten years later, more substances had been identified as problematic and were added to the list drawn up in the Convention on Psychotropic Substances. In recent years, however, the number of intoxicating and psychoactive substances has been snowballing.
According to the annual report of the European Monitoring Centre for Drugs and Drug Addiction, almost one new substance was discovered every week during 2011, and the trend is pointing steadily upward (EMCDDA, 2012). It has become untenable to continue with the default option of classifying each new substance individually. The time it takes to make a drug controlled varies widely between European countries (from a few weeks to more than a year), depending on what legislative procedures are required. Webshops selling legal highs to the “EU common market” make the most of the time-lags between jurisdictions. Hence, pressure is building up to change the procedures by which new substances are classified. The ordinary, parliamentary route of passing laws needs to be sidestepped if legislators are to keep pace with developments in the field. As early as 1986, the United States introduced an analogue clause that by default includes substances said to be structurally similar to classified substances (Kau, 2008). Everything hinges on what is meant by “similar”, an ambiguity that has never found an adequate answer. A middle road between individually listed substances and the unspecified definitions of the US analogue act was pioneered by the UK and has lately been followed in many other countries, where control measures are extended to clusters of modifications of a classified molecule. Other countries have hesitated to follow suit, out of fear of introducing too much ambiguity into a law which carries heavy penalties and extensive investigative powers (EMCDDA, 2009). An alternative route has been to introduce fast-track systems whereby individual substances can be temporarily listed within a few weeks, without the advice from a scientific board that normal listing procedures have typically required.
Another practice that has developed more or less spontaneously is for middle-range public authorities to use consumer safety and/or medicines regulation in creative ways to prevent the sale of drugs that have not yet been listed (Hughes & Winstock, 2011). The fact that legal highs are not prohibited in law might give the impression that such drugs are less dangerous than known, illegal substances. Often the opposite is the case. The toxicity of amphetamine, cocaine, etc. is well known to medical experts. One doctor specialising in anaesthetics told me that he receives almost one patient a week at his hospital in Göteborg, Sweden, who is unconscious from the intake of a novel substance. Such cases are hard to treat, as the chemical content is unknown to the medical staff. In 2012, for instance, Swedish media reported that 14 people had died from just one drug, called 5-IT. There is a tendency for less dangerous, and hence more popular, substances, old and novel, to be banned, as they quickly come to the attention of public authorities, pushing users to take ever newer and riskier, but unclassified, substances.

A theoretical excursion: the sovereign and law

While the medical risks of legal highs are easy enough to appreciate, other kinds of risk stem from the responses of legislators and law enforcement agencies. The collateral damage of international drug prevention has been thoroughly documented by scholars in the field. Especially in developing countries, the war on drugs has contributed to human rights abuses, corruption, political instability, and the list goes on and on (Barrett, 2010). Given this shoddy history, it is worth asking what negative consequences a ‘war on legal highs’ might bring. Almost every country in the European Union has revised its laws on drug prevention in the last couple of years, or is in the process of doing so, in direct response to the surge of legal highs.
The laws need to be made more agile to keep pace with the innovativeness of users and organised crime. Otherwise, law enforcement will be rendered toothless. Such flexibility comes at a high price, though. The constraints and time-lags are part and parcel of the rule of law in liberal societies, which, however imperfectly upheld, is arguably preferable to the arbitrariness of law enforcement in a police state. It is in this light that it becomes relevant to recall Carl Schmitt’s reflections on the sovereign, alluded to in the introduction of the paper. Schmitt identified the punitive system as the nexus where the self-image of pluralistic, liberal democracy has to face its own contradictions. Thus he called attention to the fact that peaceful deliberation always presupposes the violent suppression of hostile elements. The limits on the state’s monopoly of violence laid down by the rule of law are, at the end of the day, limits that the sovereign chooses to impose on himself (or not) (Schmitt, 2007; Zizek, 1999). Carl Schmitt’s radical challenge to the liberal and formalist legal tradition has been extensively commented on in recent years. Many thinkers on the left are attracted to his ideas as an antidote to what they consider to be an appeasing, post-political self-understanding in liberal societies (Mouffe, 2005). Here I am less interested in present-day appropriations of Carl Schmitt’s thinking than in using him as an entry point to discuss the works of two of his contemporary critics, Franz Neumann and Otto Kirchheimer, both associates of the Frankfurt School. They lived through the same convulsions of the Weimar Republic as Carl Schmitt did, but drew very different conclusions from that experience. Before saying anything more, let me make clear that I am not comparing the historical situation in Germany during the 1930s with the current one, a comparison that would be hyperbolic and gravely misleading.
What interests me in their writings is that they anchored the rule of law in a transformation of the capitalist economy. In a ground-breaking essay, Franz Neumann argued that formalistic modes of law and legal reasoning had enjoyed broad support from privileged business interests in an era of competitive capitalism, epitomised by nineteenth-century Britain. Competing firms wanted the state to act as an honest broker. Neumann did not take the self-descriptions of the rule of law at face value. He knew full well that the law did not apply equally to all the subjects of the land. Still, he also recognised that subjugated groups had something to lose if this pretence was given up. As a labour organiser in the Weimar Republic, he had seen first-hand how the German business elite began to abandon its commitment to strict, clear, public and prospective forms of general law. Neumann explained this change of heart by an ongoing transition from competitive to monopolistic capitalism. Monopolies did not rely on the state as a broker. Rather, universally applicable laws were perceived as an encumbrance and a source of inflexibility (Neumann, 1996). If Neumann’s reasoning is pushed too far, it turns into crude economism. It is worth bringing him up nonetheless, because his ideas provide a missing piece of the puzzle in discussions about open innovation. Recently, William Scheuerman defended the continuing relevance of Neumann’s thinking on law in light of globalisation. Multinational companies do not depend on national legislation to the same extent as before, while national parliaments are struggling to keep up, passing laws in response to developments in financial trading and global markets (Scheuerman, 2001). If the word “globalisation” is replaced with “innovation”, then Scheuerman’s argument concurs with the case I am trying to make here.
The actors involved in developing legal highs stand as examples of how the speed of innovation places demands on legislators and parliaments that are difficult to reconcile with the principles of the rule of law and democratic decision-making. Furthermore, the example of legal highs should not be understood as an isolated phenomenon. As I will argue in the next section, legal highs are indicative of broader transformations in an economy mandating innovation and technological change, including, crucially, open innovation. It is worth recalling that in 1950 Carl Schmitt, too, expressed concern about how the legal system would cope with the acceleration of society, evidence of which he claimed to see on all fronts. He divined that this would result in a ‘motorization of law’: “Since 1914 all major historical events and developments in every European country have contributed to making the process of legislation ever faster and more summary, the path to realizing legal regulation ever shorter, and the role of legal science ever smaller.” (Schmitt, 2009, p. 65)

Innovation: the last frontier

The ongoing efforts to circumvent legal definitions are carried out through innovation. The property sought in a novel molecular structure or a new family of plants is the quality of not having been classified by regulators. Innovation is here turned into a game of not-yet and relative time-lags. Legislation is the planet around which this frenetic activity gravitates. This contrasts sharply with the mainstream discourse about the relation between legal institutions and innovation, in which it is assumed that the institutions of law and contractual agreement serve to foster innovative firms by providing stability and predictability for investors (Waarden, 2001). However, a closer examination will reveal that legal highs are not such an odd one out after all.
A lot of the innovation going on in corporate R&D departments is geared towards circumventing one specific kind of legal definition, namely patents. The drive to increase productivity, lower costs and create new markets is only part of the history of innovation. As important is the drive to invent new ways of achieving the same old thing, simply for the sake of avoiding a legal entitlement held by a competitor. Perhaps this merits a third category in the taxonomy of innovations, besides radical and incremental innovations, which I choose to call “phony innovations”. Note that I do not intend this term to be derogatory. What “phony” refers to is something specific: innovation that aims to get as close as possible to a pre-existing function or effect, while being at variance with how that function is defined and described in a legal text. A case in point is naphyrone, made to simulate the experience that users previously had with mephedrone. To regulate innovation, something non-existent and unknown must be made to conform to what already exists: the instituted, formalised and rule-bound. Activist-minded members of the “psychonaut” subculture, as well as entrepreneurs selling legal highs, seize on this opportunity to circumvent state regulation, which they tend to perceive as a hostile, external force. The image of an underdog inventor who outsmarts an illegitimate state power through technical ingenuity is a trope that extends seamlessly to engineering subcultures engaged in publicly accepted activities, such as building wireless community networks (Söderberg, 2011) and open source 3D printing (Söderberg, 2014). At a time when the whole of the Earth has been mapped out and fenced in by nation states, often with science as a handmaiden, innovation and science turn out to be the final frontier, just out of reach of the instituted.
In popular writing about science in the mainstream press, science is portrayed as an inexhaustible continent, offering land grabs for everyone, this time without any Native Americans being expropriated from their lands. The colonial and scientistic undertones of such rhetoric hardly need to be pointed out. What is more interesting to note is that the frontier rhetoric also calls to mind folktales about the outlaw (and, occasionally, a social bandit or two, in Hobsbawm’s sense of the word). Science and innovation are the last hideout from the sheriff, as it were. The official recognition granted to this cultural imagination is suggested by the term “shanzhai innovation”. Shanzhai originally referred to the mountain strongholds in China where bandits evaded state authorities, but nowadays it designates product innovations made by small manufacturers of counterfeit goods, which then become new and legal products. Lying at a tangent to open innovation, shanzhai innovation, too, has become a buzzword among business leaders and policymakers (Lindtner & Li, 2012). Twenty years ago, the no-man’s-land of innovation found a permanent address: “cyberspace”. John Perry Barlow declared the independence of cyberspace vis-à-vis the governments of the industrial world. These days, of course, cyberspace enjoys as much independence from states as an encircled Indian reservation. That being said, temporality is built into the frontier notion from the start. New windows open up as the old ones are closed down. Nonetheless, a common thread connects the subcultures dedicated to cryptography, filesharing, crypto-currencies (such as Bitcoin) etc., all of them thriving on the Internet, on the one hand, and the psychonaut subculture experimenting with legal highs, on the other. Both technologies were pioneered by the same cluster of people, stemming from the same 1960s American counter-culture. Hence, the subcultures associated with either technology have inherited many of the same tropes.
A case in point is the shared hostility towards state authority and state intervention, experienced as an unjust restriction of the freedom of the individual. There is also a practical link, as the surge of legal highs would be unthinkable without the Internet. Discussion forums are key for sharing instructions on how to administer a drug, while reviews of retailers and novel substances on the Internet provide a minimum of consumer power (Walsh & Phil, 2011; Thoër & Millerand, 2012). More to the point, the crypto-anarchic subculture and the psychonaut subculture both throw into relief the troubled relation between innovation and law. The relentless search for unclassified drugs is mirrored in the creation of new filesharing protocols aimed at circumventing copyright law. To pull one well-known example out of the hat: the Swedish court case against one of the world’s largest filesharing sites, the Pirate Bay (Andersson, 2011). Swedish and international copyright law specifies that an offence consists in the unauthorised dissemination of a file containing copyrighted material. Strictly speaking, however, not a single file that violated this definition of copyright law was uploaded to or downloaded from the Pirate Bay site. The website only contained links to files that had been broken up into a torrent of thousands of fragments scattered all over the network. This qualifies as a “phony innovation” in the sense defined above, because when the fragments are combined by the end user’s computer, an effect is produced on the screen (an image, a sound, etc.) indistinguishable from the one that would have been produced had a single file been transmitted to the user. Technically speaking, the Pirate Bay provided a search engine service similar to Google. Such technical niceties create a dilemma for the entire juridical system: either stick with legal definitions and risk having the court procedure grind to a halt, or arbitrarily override the principles of the rule of law.
Labouring in the legal no-man’s-land

The case of legal highs is exceptional in at least one respect: governments try hard to prevent innovation from happening in this field. When those efforts fail, the response from policymakers and legislators has been to try again. Changes are introduced in legislative procedures at an accelerating rate, international cooperation is strengthened, and more power is invested in law enforcement agencies. This stands in contrast to most other cases of innovation, even potentially dangerous ones, where public authorities and regulators tend to adopt a laissez-faire attitude. The underlying assumption is that whatever unfortunate side effects a new technology might bring with it (unemployment, health risks, environmental degradation, etc.), they are due to technical imperfections, something that can be set right through more innovation. This argument, only occasionally confirmed by experience, has purchase because it is ultimately bound up with the imperative to stay competitive in global markets. Everyone must bow to this imperative, be it a worker, a firm or a nation state. Consequently, firms are forced by competition to follow the most innovative lead users, no matter where they lead. This is the official story, told and retold by countless Innovation Studies scholars, but there is a twist to the tale. Some lead users find themselves on the wrong side of the law, or at least in a grey zone between legality and illegality. The important point to stress here is that, even if the motives of the delinquent innovator are despicable and self-serving, judged by society’s own standards, he or she is also very, very productive. The appropriation of filesharing methods by the culture industry is telling. The distributed method for storing and indexing files in a peer-to-peer network has proven advantageous over older, centralised forms of data retrieval. The technique has become an industry standard.
Even the practice of filesharing itself has been incorporated into the marketing strategies of some content providers, including those who are pressing charges against individual filesharers (MediaDefender being the celebrity case). While filesharers and providers of filesharing services are fined or sent to prison, the innovations stemming from their (illegal) activities are greasing the wheels of the culture industry. By the same token, it is predictable that discoveries made by clandestine chemists and psychonauts will end up in the patent portfolios of pharmaceutical companies. It suffices to recall how methamphetamine cooking in the US has driven up over-the-counter sales of cold medicine (which contains ephedrine, a key precursor for methamphetamine) far beyond what any known cold epidemic could account for (Redding, 2009). As for the psychonauts, the user-generated databases of trip reports, dosages and adverse drug effects from the intake of novel psychedelic substances, which have accumulated on the Internet over the last decades, are currently being mined by pharmaceutical researchers. Thus the psychonaut subculture is enrolled in the drug discovery process of the pharmaceutical industry. The added benefit for the industry is that it has no liability towards its test subjects. In conclusion, the legal grey zone has become an incubator for innovation. In the same way as parts of the culture industry have become structurally dependent on unwaged, volunteer labour by fans and hobbyists, the computer industry is structurally dependent on the illegal practices of filesharers and hackers, and the pharmaceutical industry on the psychonauts. The outlawing of their practices lays down a negative exchange rate of labour. The established practice of appropriating ideas from communities and political movements by rewarding key individuals with access to venture capital is matched by a withheld threat of prison sentences.
Concurrently, arbitrariness in the legal grey zone becomes the condition for labouring in the Schumpeterian-Shanzhai innovation economy.

References

15.08 18:00 OpenMirage – Hannes

Hannes Mehnert

Resilient and Decentralised Autonomous Infrastructure

Current Situation

Large companies run the Internet, and take advantage of the fact that most Internet users are not specialists. In exchange for well-designed frontends for common services, those companies snitch personal data from their users, and by presenting users with long-winded terms of service they legalise their snitching. Companies are mainly interested in increasing their profit, and they will always provide data to investigators if the alternative is to switch off their service.

Interlude: Originally the Internet was designed as a decentralised communication network, and all participants were specialists who understood it and could publish data. Nowadays, thanks to commercialisation, users are presented with colourful frontends and only a minority understands the technology behind them.

Since the revelations by whistleblowers, awareness of these facts has been rising. People looking for alternatives turn to hosting providers run by autonomous collectives which are not interested in profit. These collectives are great, but unfortunately collateral damage cannot be prevented if investigators seize their equipment (as seen in the Lavabit case). While the collectives work very hard on the administrative side, they can barely invest time in developing new solutions from scratch.

The configuration and administration effort needed to run your own services is huge. For example, in order to run your own mail server you need, at the very least, a protocol-level understanding of the Domain Name System (DNS), SMTP and IMAP. Furthermore, you need to be familiar with basic system administration tasks, know how a UNIX filesystem is organised and what permissions are, and keep up to date with security advisories. This complexity stems from the fact that general-purpose operating systems and huge codebases are used even for simple services.
Best practices in system administration compartmentalise separate services into separate containers (either virtual machines, or lightweight approaches such as FreeBSD jails) to isolate the damage if one service has a security vulnerability. To overcome the complexity, make it feasible for people to run their own services, and decentralise our infrastructure, we either need to patch the existing tools and services with glue to make them easy to use for non-specialists, or we need to simplify the complete setup. The first approach is prone to errors and increases the trusted code base. We focus on the latter approach: we will build an operating system from scratch, and consider which user demands we can solve with technology. By doing so we can learn from the failures of current systems (both security- and configuration-wise). We will enable people to publish data on their own, without the need for a centralised server infrastructure. Furthermore, people get back control over their private data, such as address books and emails.

Vision

All your devices connect to each other securely. Apart from the initial deployment of keypairs, no configuration is required. Your personal overlay network reuses existing Internet infrastructure. You can easily manage communication channels to other people.

Technology

Core technology

We use Mirage OS¹ [2, 3], a library operating system. Mirage executes OCaml applications directly as XEN guest operating systems, with no UNIX layer involved. Each service contains only the required libraries (e.g. a name server will not include a file system, user management, etc.). The use of OCaml has immediate advantages: memory corruption is mitigated at the language level, the expressive static type system catches problems at compile time, and modules allow the same source to be compiled either to UNIX for development or to XEN for deployment. Mirage OS is completely open source, under BSD/MIT licenses.
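Mirage gets this portability from OCaml’s module system: application code is written against module signatures, and the concrete implementation (UNIX or XEN) is chosen when the service is built. The Python sketch below only mirrors the shape of that idea using a structural `Protocol`. The `Console` interface, both backends and `hello_service` are hypothetical names, not Mirage APIs, and unlike OCaml, Python checks none of this at compile time.

```python
from typing import Protocol


class Console(Protocol):
    """The one capability this toy service depends on (hypothetical interface)."""

    def write(self, line: str) -> None: ...


class UnixConsole:
    """Development backend: writes to the host terminal."""

    def write(self, line: str) -> None:
        print(line)


class MemoryConsole:
    """Stand-in for a deployment backend: keeps lines in memory instead."""

    def __init__(self) -> None:
        self.lines: list[str] = []

    def write(self, line: str) -> None:
        self.lines.append(line)


def hello_service(console: Console, name: str) -> None:
    """Application logic, written once against the interface and reused unchanged."""
    console.write(f"hello, {name}")


if __name__ == "__main__":
    hello_service(UnixConsole(), "development")  # prints to the terminal
    deployed = MemoryConsole()
    hello_service(deployed, "deployment")
    print(deployed.lines)
```

The service never names a concrete backend, so swapping one for the other requires no change to `hello_service`; in Mirage the same swap happens at build time and is checked by the compiler.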
The trusted code base of each service is easily two orders of magnitude smaller than common operating system kernels and libraries.

Performance

Each service consists of only a single process, which means a single address space and no context switching between kernel and userland, and received byte arrays are transferred to the respective service using zero-copy.

Reduced configuration complexity

Instead of using configuration files with an ad-hoc syntax in a general-purpose operating system, configuration is done directly in the application before it is compiled. Each service requires only a small amount of configuration, and most of it can be checked at compile time. No further reconfiguration is required at run time. Updating services is straightforward: each service is contained in a virtual machine, which can be stopped and replaced; on-the-fly updates and testing of new versions are easily done by running the old and the new service in parallel.

Existing libraries

Libraries already available for Mirage OS include a TCP/IP stack, an HTTP implementation, a distributed, persistent, branchable git-like store (Irmin²), a DNS implementation, a TLS implementation³ [6], and more.

Future

On top of Mirage OS we want to develop an authenticated and encrypted network between your devices, using DNSSEC and DNSCurve for setting up the communication channels. Legacy UNIX devices will need a custom DNS resolver and a routing engine which sets up the encrypted channels. The routing engine will use various tactics: NAT punching, Tor hidden services, VPN, direct TLS connections, etc. In conclusion, the properties of our proposed network are:

Further future

In the first stage only data will be decentralised, but communication will still rely on the centralised DNS. Later on, this can be replaced by a distributed hash table or services such as GNUnet.

About the Author

Hannes talked in 2005 at What the Hack (a Dutch hacker camp), together with Andreas Bogk, about Phasing Out UNIX before 2038-01-19⁴, and implemented TCP/IP in Dylan [1, 5]. In 2013 he received a PhD in formal verification of the correctness of imperative programs [4], during which he discovered that shared mutable state is especially tedious to reason about. He thus decided to smash the state, one field at a time. Hannes works on reimplementing desired Internet protocols (e.g. TLS [6]) in a functional world, on top of Mirage OS [2, 3].

References

15.08 18:00 A socio-legal approach to hacking – Michael Dizon

Michael Anthony C. Dizon

Rules of a networked society

Here, there and everywhere

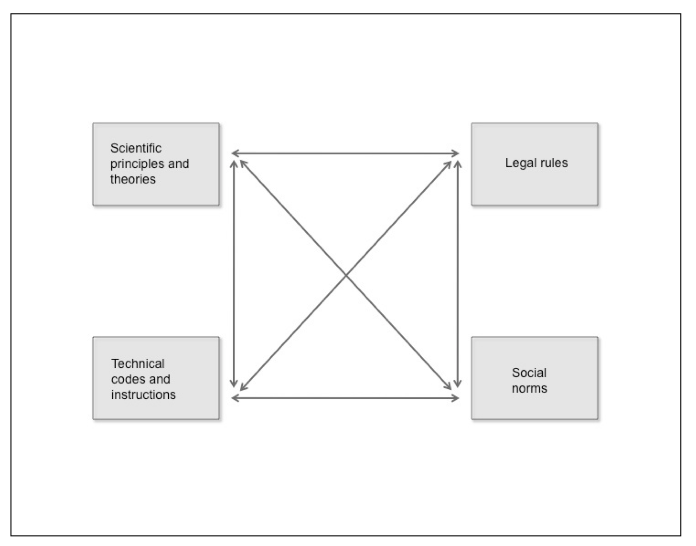

Impact of technological rules and actors on society

At the height of the dot-com boom in the late 1990s, Lawrence Lessig expressed and popularized the idea that technical code regulates (Lessig 2006). Since then, with rapid technological developments and the widespread dissemination of technologies into all aspects of people’s lives, the growing influence exerted on the behavior of people and things by technological rules and actors other than and beyond the law and the state has become all the more apparent. The behaviors of people online are to a certain extent determined by the technical protocols and standards adopted by the Internet Engineering Task Force (IETF) and other non-governmental bodies that design the architecture of the internet (see Murray 2007, 74; Bowrey 2005, 47). The Arab Spring has shown that internet-based communications and technologies can enable, or play a role in, dramatic political and social change (Howard 2011; Stepanova 2012).¹ Making full use of their technical proficiency, the people behind the whistleblower website Wikileaks and the hacktivist group Anonymous have become influential albeit controversial members of civil society who push the boundaries of what democracy, freedom and civil disobedience mean in a digital environment (Ludlow 2010; Hampson 2012, 512-513; Chadwick 2006, 114). Technology-related rules even had a hand in one of the most significant events of the new millennium, the Global Financial Crisis. It is claimed that misplaced reliance by financial institutions on computer models based on a mathematical formula (the Gaussian copula function) was partly to blame for the miscalculation of risks that led to the crisis (Salmon 2009; MacKenzie & Spears 2012). The fact that a formula (i.e., a rule expressed in symbols) can affect the world in such a momentous way highlights the critical role of rules in a ‘networked information society’ (Cohen 2012, 3) or ‘network society’ (Castells 2001, 133).
This paper argues that, in order to better understand how a networked society is organized and operates now and in the near future, it is important to develop and adopt a pluralist, rules-based approach to law and technology research. This means coming to terms with and seriously examining the plural legal and extra-legal rules, norms, codes, and principles that influence and govern behavior in a digital and computational universe (see Dyson 2012), as well as the persons and things that create or embody these rules. Adopting this rules-based framework for technology law research is valuable since, with the increasing informatization and technologization of society (Pertierra 2010, 16), multiple actors and rules – both near and far – do profoundly shape the world we live in.

From ‘what things regulate’ to ‘what rules influence behavior’

A pluralist and distributed approach to law and technology is not new (see Schiff Berman 2006; Hildebrandt 2008; Mifsud Bonnici 2007, 21-22 (‘mesh regulation’); Dizon 2011). Lessig’s theory of the four modalities of regulation (law, social norms, the market, and architecture) serves as the foundation and inspiration for many theories and conceptualizations about law, regulation and technology within and outside the field of technology law (Lessig 2006, 123; Dizon 2011). Modifying Lessig’s model, Murray and Scott (2002, 492) come up with what they call the four bases of regulation: hierarchy, competition, community, and design. Similarly, Morgan and Yeung (2007, 80) advance five classes of regulatory instruments that control behavior, namely: command, competition, consensus, communication, and code. Lessig’s conception of “what things regulate” (Lessig 2006, 120) is indeed insightful and useful for thinking about law and technology in a networked society.
I contend, however, that his theory can be remade and improved by: (1) modifying two of the modalities; (2) moving away from the predominantly instrumentalist concerns of ‘regulation’ and how people and things can be effectively steered and controlled to achieve stated ends (Morgan & Yeung 2007, 3-5; Black 2002, 23, 26); and (3) focusing more on how things actually influence behavior rather than just how they can be regulated. I prefer to use the term ‘technology’ rather than ‘architecture’ since the former is a broader concept that subsumes architecture and code within its scope. By technology, I mean the ‘application of knowledge to production from the material world. Technology involves the creation of material instruments (such as machines) used in human interaction with nature’ (Giddens 2009, 1135). The regulatory modality of ‘the market’ is slightly narrow in its scope since it pertains primarily to the results of people’s actions and interactions. This modality can be expanded to also cover the ‘natural and social forces and occurrences’ (including economic ones) that are present in the physical and material world. In the same way that market forces have been the classic subject of law and economics, it makes sense for those studying law and technology issues to also examine other scientific phenomena. As will be explained in more detail below, social norms are distinguished from natural and social phenomena since the former are composed of prescriptive (ought) rules while the latter are expressed as descriptive (is) rules. Based on the above conceptual changes to Lessig’s theory, the four things that influence behavior are: (1) law, (2) norms, (3) technology, and (4) natural and social forces and occurrences. The four things that influence behavior can be further described and analyzed in terms of the rules that constitute them.
From a rules-based perspective, law can be conceived of as being made up of legal rules, and norms are composed of specific social norms. On their part, technology consists of technical codes and instructions, while natural and social forces and occurrences are expressed in scientific principles and theories. By looking at what rules influence behavior, one can gain a more detailed, systematic and interconnected picture of who and what governs a networked society. Lessig’s well-known diagram of what constrains an actor can be reconfigured according to the four types of rules that influence behavior. The four rules of a networked society therefore are legal rules, social norms, technical codes, and scientific principles (see Figure 1).

Rules of a networked society

Plurality of rules

How the various rules of a networked society relate to and interact with each other is very important to understanding how the informational and technological world works. Having a clear idea of how and why the rules are distinct yet connected to one another is paramount given that, in most cases, there are multiple overlaps, connections, intersections and even conflicts among these rules. More often than not, not one but many rules are present and impact behavior in any given situation. The presence of two or more rules or types of rules that influence behavior in a given situation gives rise to a condition of

Legal rules and social norms

Legal rules and social norms are both prescriptive types of rules. A social norm has been defined in a number of ways: as ‘a statement made by a number of members of a group, not necessarily by all of them, that the members ought to behave in a certain way in certain circumstances’ (Opp 2001, 10714), as ‘a belief shared to some extent by members of a social unit as to what conduct ought to be in particular situations or circumstances’ (Gibbs 1966, 7), and as ‘generally accepted, sanctioned prescriptions for, or prohibitions against, others’ behavior, belief, or feeling, i.e. what others ought to do, believe, feel – or else’ (Morris 1956, 610). Dohrenwend proffers a more detailed definition:

From the above definitions, the attributes of social norms are: ‘(1) a collective evaluation of behavior in terms of what it ought to be; (2) a collective expectation as to what behavior will be; and/or (3) particular reactions to behavior, including attempts to apply sanctions or otherwise induce a particular kind of conduct’ (Gibbs 1965, 589). Legal rules and social norms have a close and symbiotic relationship. Cooter explains one of the basic dynamics of laws and norms: ‘law can grow from the bottom up by [building upon and] enforcing social norms’ (Cooter 1996, 947-948), but it can also influence social norms from the top down – “law may improve the situation by enforcing a beneficial social norm, suppressing a harmful social norm, or supplying a missing obligation” (ibid 1996, 949). Traditional legal theory has settled explanations of how laws and norms interact. Social norms can be transformed into legal norms or accorded legal status by the state in a number of ways: incorporation (social norms are transformed or codified into law by way of formal legislative or judicial processes), deference (the state recognizes social norms as facts and does not interfere with certain private transactions and private orderings), delegation (the state acknowledges acts of self-regulation of certain groups) (Michaels 2005, 1228, 1231, 1233 and 1234), and recognition (the state recognizes certain customary or religious norms as state law) (van der Hof & Stuurman 2006, 217).

Technical codes and instructions

Technical codes like computer programs consist of descriptive rather than prescriptive instructions. However, the value of focusing on rules of behavior is that the normative effects of technical codes can be fully recognized and appreciated.
Despite the title of his seminal book and his famous pronouncement that ‘code is law’, Lessig (2006, 5) does not actually consider technical or computer code to be an actual category or form of law, and the statement is basically an acknowledgement of code’s law-like properties. As Leenes (2011, 145) points out, ‘Although Lessig states that ‘code is law’, he does not mean it in a literal sense’. Even Reidenberg’s earlier concept of Lex Informatica, which inspired Lessig, is law in name only (Lessig 2006, 5). Reidenberg explicitly states that Lex Informatica can be ‘a useful extra-legal instrument that may be used to achieve objectives that otherwise challenge conventional laws and attempts by government to regulate across jurisdictional lines’ (1997, 556 emphasis added). While Lex Informatica ‘has analogs for the key elements of a legal regime’, it is still ‘a parallel rule system’ that is ‘distinct from legal regulation’ (Reidenberg 1997, 569, 580 emphasis added). But if ‘code is not law’ as some legal scholars conclude (Dommering 2006, 11, 14), what exactly is the relationship between technical codes and law, and what is the significance of code in the shaping of behavior in a networked society? 
Using the above definition and characterization of norms, technical code in and of itself (i.e., excluding any such norms and values that an engineer or programmer intentionally or unintentionally implements or embodies in the instructions) is neither a legal rule nor a social norm because: (1) it is not a shared belief among the members of a unit; (2) there is no oughtness (must or should) or sense of obligation or bindingness in the if-then statements of technical code (they are simply binary choices of on or off, true or false, is versus is not); (3) there is no ‘or else’ element that proceeds from the threat of sanctions (or the promise of incentives) for not conforming (or conforming) with the norm; (4) the outcome of an if-then statement does not normally call for the imposition of external sanctions by an authority outside of the subject technology; and (5) generally, there is no collective evaluation or expectation within the technical code itself of what the behavior ought to be or will be (a matter of is and not ought). Even though technical codes and instructions are not per se norms, they can undoubtedly have normative effects (van der Hof & Stuurman 2006, 218, 227). Furthermore, technologies are socially constructed and can embody various norms and values (Pinch & Bijker 1984, 404). A massively multiplayer online role-playing game (MMORPG) such as World of Warcraft has its own rules of play and can, to a certain extent, have normative effects on the persons playing it (they must abide by the game’s rules and mechanics). A virulent computer program such as the ‘ILOVEYOU’ virus/worm (or the Love Bug) that caused great damage to computers and IT systems around the world in 2000 can have a strong normative impact; it can change the outlooks and behaviors of various actors and entities (Cesare 2001, 145; Grossman 2000).
As a result of the outbreak of the Love Bug, employees and private users were advised through their corporate computer policies or in public awareness campaigns not to open email attachments from untrustworthy sources. In the Philippines, where the alleged author of the Love Bug resided, the Philippine Congress finally enacted a long-awaited Philippine Electronic Commerce Act, which contained provisions that criminalized hacking and the release of computer viruses.⁴ The Love Bug, which is made up of technical instructions, definitely had a strong normative impact on computer use and security. Digital rights management (DRM) is an interesting illustration of the normative effects of technology since, in this case, technical code and legal rules act together to enforce certain rights for the benefit of content owners and limit what users can do with digital works and devices (see Brown 2006). DRM on a computer game, for example, can prevent users from installing or playing it on more than one device. Through the making of computer code, content owners are able to create and grant themselves what are essentially new and expanded rights over intellectual creations that go beyond the protections provided to them under intellectual property laws (van der Hof & Stuurman 2006, 215; McCullagh 2005). Moreover, supported by both international and national laws,⁵ DRM acts as a set of hybrid techno-legal rules: not only does it restrict the ability of users to access and use digital works wrapped in copy protection mechanisms, but the circumvention of DRM and the dissemination of information about circumvention techniques are also subject to legal sanctions (see Dizon 2010).⁶ When users across the world play a computer game with ‘always-on’ DRM like Ubisoft’s Driver, it is tantamount to people’s behavior being subjected to a kind of transnational, technological control, which users have historically revolted against (Hutchinson 2012; Doctorow 2008).
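The point that a bare if-then instruction can still have normative effects can be seen in a few lines of code. The following Python sketch of a one-device activation rule is hypothetical and does not describe any vendor’s actual DRM scheme. It contains no ‘ought’, no shared belief and no sanction, only a condition, yet it decides what a user can do with a purchased game.

```python
class ActivationServer:
    """Toy licence server allowing each licence key on a limited number of devices.

    Hypothetical illustration only; not modelled on any real DRM product.
    """

    def __init__(self, max_devices: int = 1) -> None:
        self.max_devices = max_devices
        self.activations: dict[str, set[str]] = {}

    def activate(self, licence_key: str, device_id: str) -> bool:
        devices = self.activations.setdefault(licence_key, set())
        if device_id in devices:
            return True   # a device already registered may keep playing
        if len(devices) >= self.max_devices:
            return False  # simply 'is' or 'is not': the limit is reached
        devices.add(device_id)
        return True


if __name__ == "__main__":
    server = ActivationServer()
    print(server.activate("GAME-KEY", "desktop"))  # True: first device
    print(server.activate("GAME-KEY", "laptop"))   # False: second device refused
```

Nothing in the code says the user should not play on a second machine; the refusal is simply the outcome of a comparison, which is exactly where its normative force lies.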
Another example of hybrid techno-legal rules is the so-called Great Firewall of China. This computer system, which monitors and controls what users and computers within China can access and connect to online, clearly has normative effects since it determines the actions and communications of an entire population (Karagiannopoulos 2012, 155). In fact, it does not only control what can be done within China but also affects people and computers all over the world (e.g., it can prevent a Dutch blogger or a U.S. internet service such as YouTube from communicating with Chinese users and computers). In light of their far-reaching normative impact, technical codes and instructions should not be seen as mere instruments or tools of law (Dommering 2006, 13-14), but as a distinct type of rule in a networked society. Code deserves serious attention and careful consideration in its own right.

Scientific principles and theories

There is a whole host of scientific principles, theories and rules from the natural, social and formal sciences that describe and explain various natural and social phenomena that influence the behavior of people and things. Some of these scientific principles are extremely relevant to understanding the inner workings of a networked society. For example, Moore’s Law is the observation-cum-prediction of Intel’s co-founder Gordon Moore that ‘the number of transistors on a chip roughly doubles every two years’,⁷ and can be expressed in the mathematical formula $n = 2^{(y - 1959)/d}$ (Ceruzzi 2005, 585).⁸ Since 1965, this principle and the things that it represents have shaped and continue to profoundly influence all aspects of the computing industry and digital culture, particularly what products and services are produced and what people can or cannot do with computers and electronic devices (Ceruzzi 2005, 586; Hammond 2004; see Anderson 2012, 73, 141).
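A worked instance of the formula shows how much hangs on the doubling period d. Reading n as the number of components on a chip (the formula makes n = 1 in the base year 1959), y as the year and d as the doubling period in years (our reading of the notation, which is not spelled out above), the short Python sketch below evaluates it; the function name is an illustrative assumption.

```python
def moore_count(year: float, doubling_years: float) -> float:
    """n = 2 ** ((y - 1959) / d); equals 1 in the base year 1959."""
    return 2 ** ((year - 1959) / doubling_years)


if __name__ == "__main__":
    # Same span of years, two different doubling periods.
    for d in (1, 2):
        print(d, moore_count(1975, d))
```

With d = 1 the formula gives 65,536 for 1975; with d = 2 it gives 256. The prediction is only as determinate as the doubling period that the industry comes to expect, which bears on the point about self-fulfilling prophecy that follows.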
Ceruzzi rightly claims, ‘Moore’s law plays a significant role in determining the current place of technology in society’ (2005, 586). However, it is important to point out that Moore’s Law is not about physics; it is a self-fulfilling prophecy that is derived from ‘the confluence and aggregation of individuals’ expectations manifested in organizational and social systems which serve to self-reinforce the fulfillment of Moore's prediction’ for some doubling period (Hammond 2004, citations omitted) and is ‘an emergent property of the highly complex organization called the semiconductor industry’ (ibidem). This statement reveals an important aspect of Moore’s Law and other scientific principles and rules – that they are also subject to social construction. As Jasanoff eruditely explains:

Since the construction of scientific rules and facts is undertaken by both science and non-science institutions, “what finally counts as ‘science’ is influenced not only by the consensus views of scientists, but also by society’s views of what nature is like – views that may be conditioned in turn by deep-seated cultural biases about what nature should be like” (Jasanoff 1991, 347). Due to “the contingency and indeterminacy of knowledge, the multiplicity and non-linearity of ‘causes’, and the importance of the narrator’s (or the scientific claims-maker’s) social and cultural standpoint in presenting particular explanations as authoritative” (Jasanoff 1996, 411-412), science is without doubt a ‘deeply political’ and ‘deeply normative’ activity (Jasanoff 1996, 409, 413; see Geertz 1983, 189; Hoppe 1999). It reveals as much about us as it does about the material world. The ‘constructedness’ (Jasanoff 1991, 349) of science can also be seen in the history of the use of and meaning ascribed to the term ‘scientific law’. The use of the term ‘law’ in reference to natural phenomena has been explained as ‘a metaphor of divine legislation’, which ‘combined the biblical ideas of God’s legislating for nature with the basic idea of physical regularities and quantitative rules of operation’ (Ruby 1986, 341, 342, 358, citations omitted). Ruby argues, however, that the origins of the use of the term law (lex) for scientific principles are not metaphorical but are inherently connected to the use and development of another term, rule (regula) (Ruby 1986, 347, 350). Through the changing uses and meanings of lex and regula and their descriptive and/or prescriptive application to the actions of both man and nature throughout the centuries, law in the field of science became more commonly used to designate a more fundamental or forceful type of rule, which nevertheless pertains to some ‘regularity’ in nature.
At its core, a scientific principle or law is about imagining ‘nature as a set of intelligible, measurable, predictable regularities’ (Ruby 1986, 350 (emphasis added)). Another important characteristic of scientific principles is that they act as signs, and consist of both signifier and signified. Moore’s Law is both the expression and the embodiment of the natural and social forces and occurrences that it describes. As Ruby (1986, 347) explains, “in the case of natural phenomena it is not always possible to distinguish the use of lex for formulated principles from that for regularities in nature itself”. Thus, from a practical standpoint, natural and social phenomena are reified and can be referred to by the labels and formulations of the relevant scientific principles that they are known by. For example, rather than saying, ‘the forces described by Moore’s Law affected the computer industry’, it can be stated simply as ‘Moore’s Law affected the computer industry’. There is much that can be learned about how the world works if we take as seriously the influence of scientific rules on behavior as we do legal ones. Scientific principles are descriptive and not prescriptive rules, but, like technical codes, they too are socially constructed and have significant normative effects on society and technology, and are thus worth studying in earnest (see Jasanoff 1996, 397 ‘co-production of scientific and social order’). People who are aware of the descriptive ‘theory of the Long Tail’ (Anderson 2007, 52)⁹ would conform their actions to this rule and build businesses that answer the demands of niche markets. Scientists and engineers are obviously cognizant of the “law of gravity” and they know that they ought to design rockets and airplanes based on this important descriptive principle. Competition authorities know that they must take into account market forces and relevant economic principles before imposing prescriptive rules on a subject entity or market.
These and many other examples show how descriptive rules can also give rise to or be the basis of ought actions and statements.¹⁰ Descriptive rules as such can influence behavior and have normative effects. Being able to incorporate scientific principles within the purview of law and technology research is important since it creates connections that bring the fields of law and science and technology ever closer together. If there is value in a sociologist of science and technology studying law-making processes (Latour 2010), there is equal merit in law and technology researchers examining scientific principles and technical codes, since rules that govern the networked society can similarly be made and found in laboratories and workshops (Latour 2010; Callon 1987, 99).

Significance of rules

On a theoretical level, a rules-based approach is very useful and valuable to law and technology research in a number of ways. First, it distinguishes but does not discriminate between normative and descriptive rules. While the key distinction between is and ought is maintained, the role and impact of descriptive rules on behavior and order is not disregarded but is, in fact, fully taken into account. By focusing as well on descriptive rules and regularities that are not completely subject to human direction, a rules-based framework can complement and support the more instrumentalist, cybernetic and state-centric theories of and methods for law and technology (Morgan & Yeung 2007, 3-5; Black 2002, 23, 26). Rather than concentrating solely or mainly on how state actors and the law directly or indirectly regulate behavior, a rules-based approach creates an awareness that problems and issues brought about by social and technological changes are often not completely solvable through man-made, top-down solutions alone, and that more organic and bottom-up approaches should also be pursued.
By placing equal emphasis on descriptive rules such as technical codes and scientific principles and their normative effects, the complexity and unpredictability of reality can be better understood, and the people, things and phenomena within and beyond our control can be properly considered, addressed or, in some cases, left alone. Second, conceptualizing the networked society in terms of is and ought rules makes evident the ‘duality of structure’ that recursively constitutes and shapes our world (Giddens 1984, 25, 375). As Giddens explains,

Applying Giddens’ ‘theory of structuration’, a networked society is thus not constituted solely by one dimension to the exclusion of another (agency versus structure, human against machine, man versus nature, instrumentalism or technological determinism, society or technology); rather, it is the action-outcome of the mutual shaping of any or all of these dualities (Giddens 1984). Finally, a rule can be a key concept for an interdisciplinary approach to understanding law, technology and society. A rule can serve as a common concept, element or interface that connects and binds different academic fields and disciplines (Therborn 2002, 863). Given the increasing convergence of different technologies and of various fields of human activity (both between and within them), and the multidisciplinary perspectives demanded of research today, a unifying concept can be theoretically and methodologically useful. The study of rules (particularly norms) has received serious attention from fields as diverse as law (see Posner 2000; Sunstein 1996; Lessig 1995; Cooter 2000), sociology (Hecter 2001), economics (McAdams & Rasmusen 2007; Posner 1997), game theory (Bicchieri 2006; Axelrod 1986), and even information theory (Boella et al. 2006; Floridi 2012). The study of ‘normative multiagent systems’ illustrates the interesting confluence of issues pertaining to law, technology and society under the rubric of rules (Boella et al. 2006; Savarimuthu & Cranefield 2011).

Rules of hacking

In addition to its conceptual advantages, a rules-based approach can be readily applied to analyze real-world legal and normative problems that arise from technical and social change. There can be greater clarity in determining what issues are involved and what actions are possible when one perceives the world as being ‘normatively full’ (Griffiths 2002, 34) and replete with rules.
For instance, the ‘problem’ of computer hacking11 is one that legislators and other state actors have been struggling with ever since computers came into wide use. Using the rules of a networked society as a framework for analysis, it becomes evident that hacking is not simply a problem to be solved but a complex techno-social phenomenon that needs to be properly observed and understood.

Laws on hacking

Early attempts to regulate hacking seemingly labored under the impression that the only rules that applied were legal rules. Thus, despite the absence of empirical data showing that hacking was an actual and serious threat to society, legislators around the world enacted computer fraud and misuse statutes that criminalized various acts of hacking, particularly unauthorized access to a computer (Hollinger 1991, 8). Some studies have shown, however, that these anti-hacking statutes have mostly been used against disloyal and disgruntled employees and only seldom against anonymous outsiders who break into a company’s computer system, the oft-cited bogeyman of computer abuse laws (Hollinger 1991, 9; Skibbel 2003, 918). Not all laws, though, are opposed to all forms of hacking. The Software Directive upholds the rights to reverse engineer and to decompile computer programs to ensure interoperability, subject to certain requirements.12 The fair use doctrine and similar limitations to copyright provide users and developers with some (though clearly not much) space and freedom to hack and innovate (see Rogers & Szamosszegi 2011).

Norms of hackers

Another thing that state actors fail to consider when dealing with hacking is that computer hackers belong to a distinct culture with its own set of rules. Since the social norms and values of hackers are deeply held, simply labeling hacking as illegal or deviant is not sufficient to deter hackers from engaging in these legally prohibited activities.
In his book Hackers, Levy codified some of the most important norms and values that make up the hacker ethic:

These norms and values lie at the very heart of hacker culture and are a source from which hackers construct their identity. Therborn (2002, 869) explains the role of norms in identity formation: “This is not just a question of an ‘internalization’ of a norm, but above all a linking of our individual sense of self to the norm source. The latter then provides the meaning of our life”. While hacker norms have an obviously liberal and anti-establishment inclination, the main purposes of hacking are generally positive and socially acceptable (e.g., freedom of access, openness, freedom of expression, autonomy, equality, personal growth, and community development). This is not to deny that some hackers commit criminal acts and cause damage to property. However, the fear or belief that hacking is intrinsically malicious or destructive is not supported by hacker norms. In truth, many computer hackers adhere to the rule not to cause damage to property or harm to others (Levy 2010, 457). Among the original computer hackers at the Massachusetts Institute of Technology (MIT), as well as among many other types and groups of hackers, there is a ‘cultural taboo against malicious behavior’ (Williams 2002, 178). Even the world-famous hacker Kevin Mitnick, who has been unfairly labeled a ‘dark-side hacker’ (Hafner & Markoff 1991, 15), never hacked for financial or commercial gain (Mitnick & Simon 2011; Hollinger 2001, 79; Coleman & Golub 2008, 266). It is not surprising, then, that the outlawing and demonization of hacking inflamed rather than suppressed the activities of hackers. After his arrest in 1986, the hacker who went by the pseudonym The Mentor wrote a hacker manifesto that was published in Phrack, a magazine for hackers, and became a rallying call for the community.13 The so-called ‘hacker crackdown’ of 1990 (also known as Operation Sun Devil, in which U.S. state and federal law enforcement agencies attempted to shut down rogue bulletin boards run by hackers that were allegedly “trading in stolen long distance telephone access codes and credit card numbers”) (Hollinger 2001, 79) had the unintended effect of spurring the formation of the Electronic Frontier Foundation, an organization of digital rights advocates (Sterling 1992, 12). Similarly, the suicide of the well-known hacker Aaron Swartz, who at the time of his death was being prosecuted by the US Justice Department for acts of hacktivism, has spurred a campaign to finally reform the problematic and excessively harsh US anti-hacking statutes that have been in force for decades.14 It may be argued that Levy’s book, which some consider the definitive account of hacker culture and its early history, was a response by the hacker community (with the assistance of a sympathetic journalist) to counteract the negative portrayal of hackers in the mass media and to set the record straight about the true meaning of hacking (Levy 2010, 456-457, 464; Sterling 1992, 57, 59; Coleman & Golub 2008, 255). Through Levy’s book, and especially his distillation of the hacker ethic, hackers were able to affirm their values and establish a sense of identity and community (Williams 2002, 177-178). According to Jordan and Taylor,

To illustrate the importance of Levy’s book as a statement for and about hacker culture, the well-known German hacker group Chaos Computer Club uses the hacker ethic (with a few additions) as its own standards of behavior.15

Technologies of hacking

Hackers not only practice and live out their social norms and values; these norms and values are also embodied and upheld in the technologies and technical codes that hackers make and use. This is to be expected, since hackers possess the technical means and expertise to route around, deflect or defeat the legal and extra-legal rules that challenge or undermine their norms. Sterling notes, “Devices, laws, or systems that forbid access, and the free spread of knowledge, are provocations that any free and self-respecting hacker should relentlessly attack” (1992, 66). Hackers’ resort to technological workarounds is another reason why anti-hacking laws have not been very successful in deterring hacking activities. Despite the legal prohibition against different forms of hacking, a whole arsenal of tools and techniques is available to hackers for breaking and making things. There is not enough space in this paper to discuss all of these hacker tools in detail, but the following are some technologies that clearly manifest and advance hacker norms. Free and open source software (FOSS) is a prime example of value-laden hacker technology (Coleman & Golub 2008, 262). FOSS is software distributed under a license that grants users and third-party developers the rights to freely run, access, redistribute, and modify the program (especially its source code).16 FOSS such as Linux (computer operating system), Apache (web server software), MySQL (database software), WordPress (content management system), and Android (mobile operating system) are market leaders in their respective sectors, and they exert a strong influence on the information technology industry as a whole.
The freedoms or rights granted by FOSS licenses advance the ideals of free access to computers and freedom of information, which are also the first tenets of the hacker ethic. What is noteworthy about FOSS and its related licenses is that they too are a convergence of legal rules (copyright and contract law), social norms (hacker values), technical codes (software) and scientific principles (information theory) (Coleman 2009; Benkler 2006, 60). In order to grasp the full meaning and impact of FOSS on society, one must engage with this attendant plurality of rules. Other noteworthy examples of technologies that hackers use with higher socio-political purposes in mind are Pretty Good Privacy (PGP, an encryption program for secret and secure communications) (Coleman & Golub 2008, 259), BackTrack (a Linux distribution for security auditing, including penetration testing of computer systems), Low Orbit Ion Cannon (LOIC, network stress testing software that can also be used to perform denial-of-service attacks), and circumvention tools such as DeCSS (a computer program that can decrypt content protected by a technological protection measure). Technical codes are an important consideration in the governance of a networked society since “technology is not a means to an end for hackers, it is central to their sense of self – making and using technology is how hackers individually create and how they socially make and reproduce themselves” (Coleman & Golub 2008, 271). While technical codes are not themselves norms, they can embody norms and have normative effects. As such, technical codes too are essential to understanding normativity in a networked society.

Science of hacking

The norms and normative effects of hacking tend to be supported and often magnified by scientific principles and theories.
Hackers, for instance, can rely on Moore’s Law and the principle of ‘economies of scale’ (Lemley & McGowan 1998, 494) to plan for and develop technologies that become exponentially faster and cheaper and can therefore reach the widest possible distribution. Being cognizant of Schumpeter’s ‘process of creative destruction’ (Schumpeter 1962) and Christensen’s related ‘theory of disruptive innovation’ (Christensen 2006), hackers, as innovators and early adopters of technology, are in an ideal position to take advantage of these principles and to create new technologies, or popularize the use of existing ones, that can potentially challenge or upend established industries. Creative destruction is Schumpeter’s theory that capitalist society is subject to an evolutionary process that “incessantly revolutionizes the economic structure from within, incessantly destroying the old one, incessantly creating a new one” (Schumpeter 1962, 82). Schumpeter argues that today’s monopolistic industries and oligopolistic actors will naturally and inevitably be destroyed and replaced as a result of competition from new technologies, new goods, new methods of production, or new forms of industrial organization (Schumpeter 1962, 82-83). The revolutionary Apple II personal computer, the widely used Linux open source operating system, the controversial BitTorrent file-sharing protocol, and the ubiquitous World Wide Web are some notable technologies developed by hackers,17 which, through the process of creative destruction, profoundly changed not just the economic but also the legal, social and technological structures of the networked society. Furthermore, because of their proclivity for open standards, resources and platforms that anyone can freely use and build on, hackers naturally stand to benefit from principles of network theory such as network effects.
According to Lemley and McGowan, “‘Network effects’ refers to a group of theories clustered around the question whether and to what extent standard economic theory must be altered in cases in which ‘the utility that a user derives from consumption of a good increases with the number of other agents consuming the good’” (Lemley & McGowan 1998, 483, citations omitted). This means that the more people use a technology, the greater the value each of them receives from it, and the less likely they are to switch to a competing technology. A consequence of network effects is a